What is Child-View?

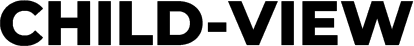

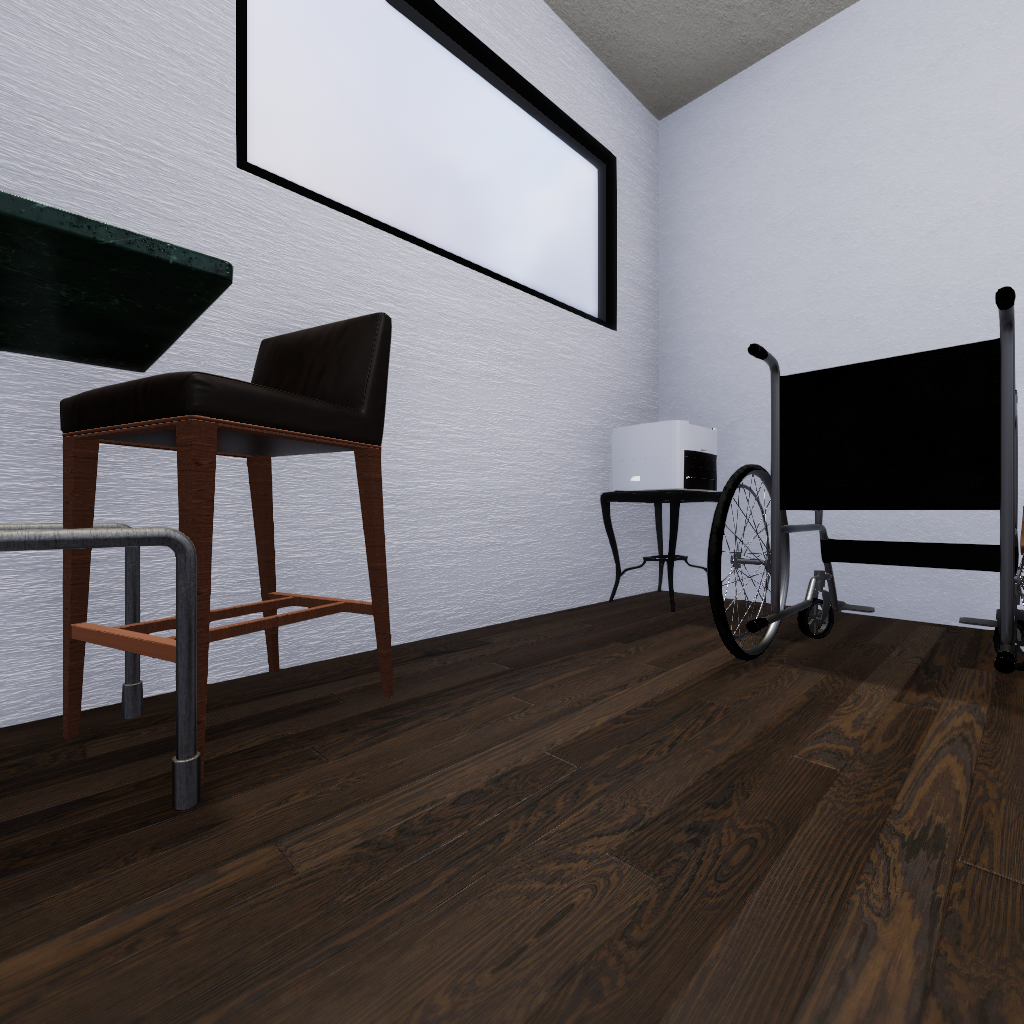

Views of a house in Child-View, captured while navigating the virtual environment. Note that the same kinds of objects can appear at different shapes and sizes depending on the agent's distance and viewing angle.

Many visual learning databases contain millions, if not billions, of distinct images used to train algorithms and models. In fact, the largest databases far exceed the visual exposure of a typical human toddler (~100 million fixations by age 2; Recht et al., 2019). Yet even when these databases are paired with the most advanced algorithms, visual deep learning still falls short of human performance on simple classification tasks (Recht et al., 2019). Making datasets even larger may improve performance, but returns diminish as training sets grow. Moreover, training on billions of images is beyond the reach of small and mid-tier research programs, which limits the ability of both industry and the scholarly community to develop better algorithms. One reason for the diminishing returns is that these large datasets have no systematic connection or similarity between images, and so they lack a crucial ingredient that real-world visual learners enjoy: environmental consistency.

With Child-View, we aim to address this inefficiency by shifting the focus of deep learning toward maximizing what can be learned from small datasets that are closer in scope and size to a child's visual experience in the first years of life. Children have much to teach us about visual learning: they draw on a rich source of environmental information by deliberately sampling the world through controlled bodily orienting, continuous sensory processing, and interaction with their surroundings (Ballard et al., 1997; Campos et al., 2000; Smith, 2005).

This mode of exploration lets children develop a robust model of the visual world even though their environment offers limited variety. Many children in modern households spend most of their first two years inside one or two buildings, seeing a limited set of spaces, surfaces, faces, and objects, but from many directions and under a wide variety of lighting conditions. Head-camera data show, for example, that just three individuals account for nearly all of the faces a typical infant in a Western household sees during the first year of life (Jayaraman et al., 2015).

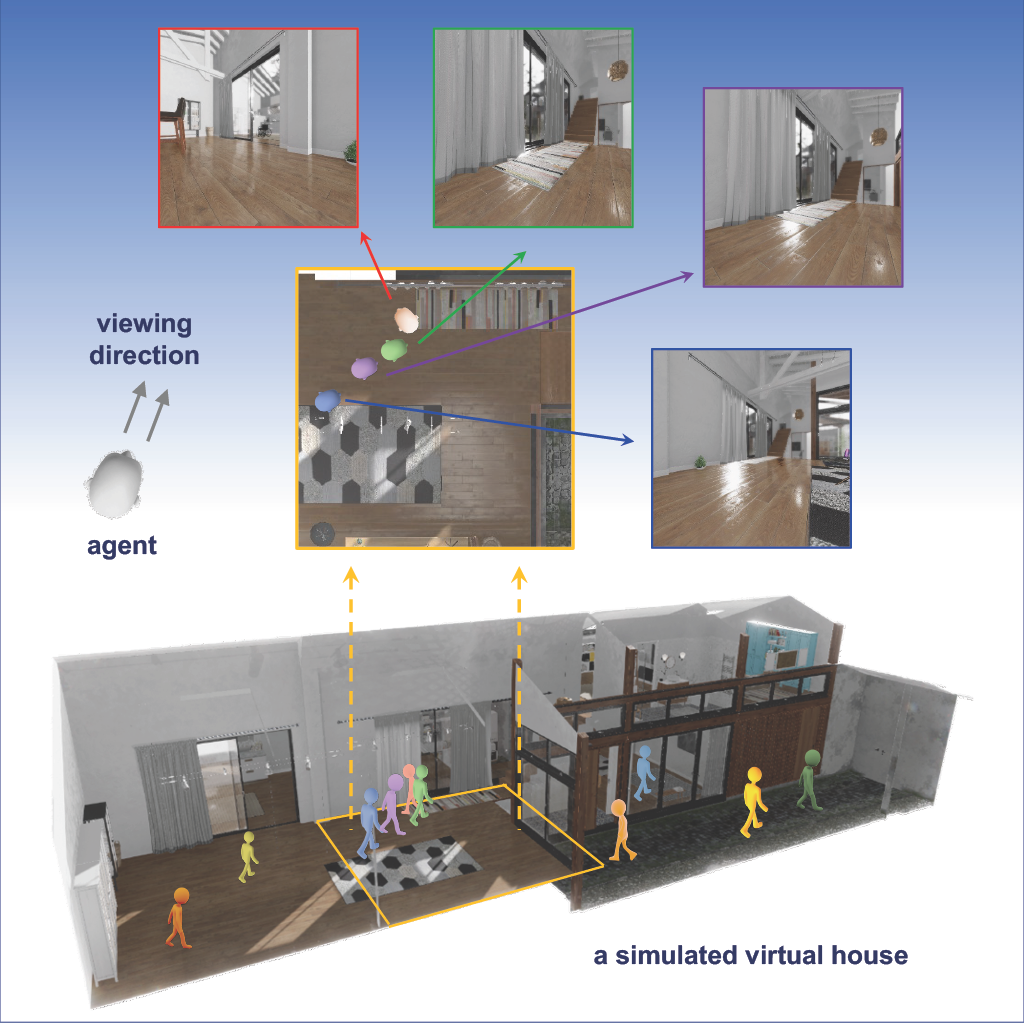

How do people learn from such input? One possibility is that a consistent environment, in which objects persist over time and across changes in perspective, lighting, and so on, forms a richer learning environment than a large set of unrelated images. The ways light is reflected, absorbed, refracted, or scattered in the physical world provide a richer set of augmentations, grounded in the laws of physics. Augmenting 2-D images cannot capture these changes, because a flat image does not model how light rays interact with surfaces in three-dimensional space (real or virtual).

Learning spatially invariant visual representations of objects has been shown to depend on the temporal adjacency of views across eye movements (Li & DiCarlo, 2008) and on exposure to temporally smooth objects (Wood & Wood, 2018); this temporal continuity allows the visual stream to learn that different views correspond to the same object. Child-View extends this idea to movement through an environment, broadening the transformations to include changes in lighting over time and changes in perspective within a scene, which can teach the learner about shadows and occlusion. The viewer uses their own perception of self-motion and time to sort sensory information according to where and when it was sampled. This environmental learning process turns the statistical consistency of the world, which a standard deep-learning approach would treat as a disadvantage, into a crucial signal for learning how light and materials interact in a fully populated visual environment.

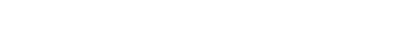

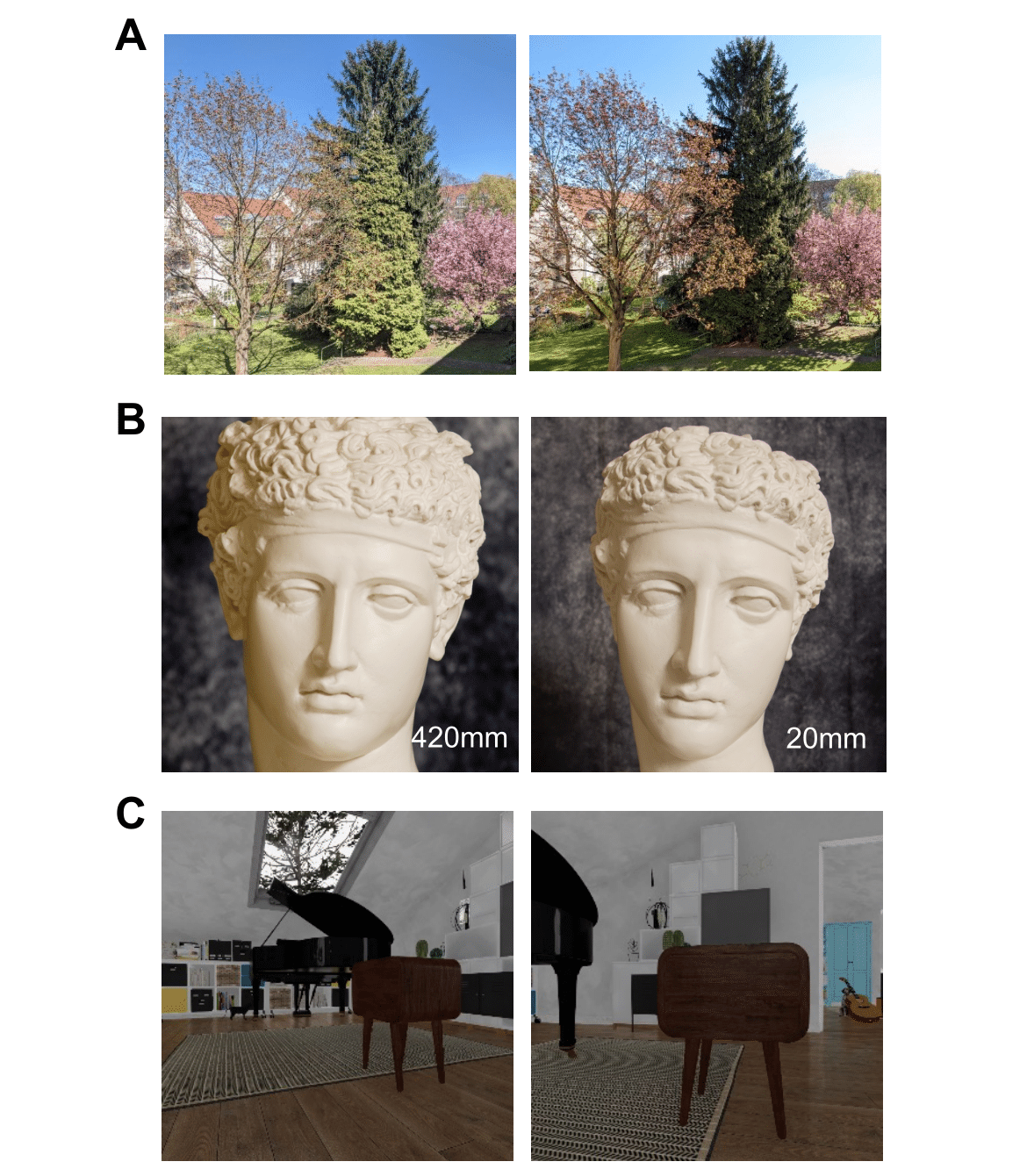

The impact of lighting, camera distance/focal length, and position on the appearance of an image

Given a large set of images annotated with spatial and temporal contiguity information, a self-supervised contrastive learning approach could use the spatiotemporal proximity of any two views as a graded similarity signal in its objective function. Our self-supervised algorithm uses temporal and spatial contiguity as a proxy for similarity during training, rather than assessing the similarity of pixelwise content.
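
As a rough illustration of such a graded signal, the sketch below scores two views by how close together they were captured in time and space. The `Frame` record, the exponential decay, the weighted-sum combination, and the parameters `tau_t`, `tau_s`, and `alpha` are all hypothetical choices made for this example, not the Child-View implementation; the sketch also ignores camera height and heading, which a real pipeline would probably need.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """One rendered view: capture time (s) and agent floor position (m).

    Hypothetical record layout; camera height and heading are ignored here.
    """
    t: float
    x: float
    z: float


def spatiotemporal_similarity(a: Frame, b: Frame,
                              tau_t: float = 5.0, tau_s: float = 2.0,
                              alpha: float = 0.5) -> float:
    """Graded similarity in [0, 1]; equals 1 for the same time and place.

    tau_t and tau_s are hypothetical decay scales for time (s) and distance (m);
    alpha is the hypothetical weight on the temporal cue. A weighted sum keeps
    time and space as partially independent cues: revisiting a location later
    still earns spatial credit after the temporal term has decayed.
    """
    dt = abs(a.t - b.t)
    ds = math.hypot(a.x - b.x, a.z - b.z)
    temporal = math.exp(-dt / tau_t)
    spatial = math.exp(-ds / tau_s)
    return alpha * temporal + (1.0 - alpha) * spatial


def similarity_matrix(frames, **kwargs):
    """Symmetric matrix of pairwise graded similarities, with ones on the diagonal."""
    return [[spatiotemporal_similarity(a, b, **kwargs) for b in frames] for a in frames]


# Usage: a nearby view one second later, and a return to the start two minutes later.
frames = [Frame(0.0, 0.0, 0.0), Frame(1.0, 0.5, 0.0), Frame(120.0, 0.0, 0.0)]
S = similarity_matrix(frames)
# S[0][1] is about 0.80 (close in both time and space);
# S[0][2] is about 0.50 (same place, but the temporal term has decayed to ~0).
```

Whether to combine the two cues by a sum, a product, or separate loss terms is an open design choice; a product would instead treat a later revisit of the same spot as dissimilar.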

Using space and time allows us to tag images as similar regardless of their apparent content. Instead of trying to discern whether two images are augmented versions of the same original image, the objective scores each comparison by the relative temporal and spatial separation between a key image and other images sampled from the environment. Space and time provide partially independent sources of similarity, each adding to the learning signal.
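
One way such an objective could be written is sketched below. It is a hypothetical illustration rather than the Child-View algorithm: it swaps in a soft-target variant of the InfoNCE contrastive loss, in which the usual single positive is replaced by a target distribution proportional to a graded similarity matrix (for example, one derived from temporal and spatial separation). The function name `graded_contrastive_loss` and the default `temperature` are assumptions made for this example; a practical version would be vectorized in a deep-learning framework.

```python
import math


def _unit(v):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def graded_contrastive_loss(embeddings, similarity, temperature=0.1):
    """Soft-target InfoNCE-style loss driven by graded (not binary) similarity.

    embeddings : N feature vectors produced by an image encoder.
    similarity : N x N non-negative graded similarities between views
                 (hypothetically, from spatiotemporal proximity).
    For each key image i, the target over other views j != i is similarity[i][j]
    normalised to sum to 1; the model distribution is a softmax over
    cosine(e_i, e_j) / temperature. The loss is the mean cross-entropy between
    the two, which is lowest when views captured close together in time and
    space also lie close together in embedding space.
    """
    z = [_unit(v) for v in embeddings]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        others = [j for j in range(n) if j != i]
        weights = [similarity[i][j] for j in others]
        w_sum = sum(weights)
        if w_sum <= 0.0:
            continue  # no graded positives for this key image; skip it
        logits = [sum(a * b for a, b in zip(z[i], z[j])) / temperature for j in others]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_norm = m + math.log(sum(math.exp(lg - m) for lg in logits))
        # Cross-entropy: -sum_j p_target(j) * log p_model(j)
        total -= sum((w / w_sum) * (lg - log_norm) for w, lg in zip(weights, logits))
        anchors += 1
    return total / anchors if anchors else 0.0
```

Because the targets are graded, a view captured a few steps away still pulls the key image's embedding toward it, only less strongly than an adjacent view, which is the behaviour the paragraph above describes.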

The image sets provided here are meant to facilitate new approaches to computer vision that learn more effectively from smaller datasets instead of relying on exhaustively large ones. All images were generated with the ThreeDWorld (TDW) engine. You can also generate your own images by following the step-by-step tutorial on this website.

The Benefits of Child-View

Psychological Principles

Child-View is inspired by principles of cognitive development, using the way infants learn to see to inform the design of human-inspired visual learning datasets. The ultimate aim is to improve the classification performance of deep-learning algorithms.

Environments

Environmental simulation in ThreeDWorld approximates the physics of light, allowing us to vary small details of lighting precisely while keeping the environment the same. This supports fine-grained experiments in visual learning that isolate factors such as illuminant variation, shadows, and binocular vision while the physical environment stays unchanged.

Accessibility

Modern visual learning usually relies on massive databases that exceed the visual experience of a typical human toddler. These very large sets are inaccessible to small research groups, both because they are often proprietary and because their sheer size demands substantial storage and computation. Developing new approaches to machine vision with smaller datasets makes it faster to test new ideas and allows a greater diversity of labs to participate in research.

Building your own Child-View database is easy!

This section will be prepared shortly.

Want to make your own egocentric database in a TDW virtual environment to further improve visual deep learning?

Follow our step-by-step guide to build your own egocentric dataset in a TDW virtual environment and begin investigating your own visual deep-learning questions!